Back Propagation

Readings

Rumelhart, D. E., Hinton, G. E., and Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323, 533-536.

- Paul J. Werbos invented the back-propagation algorithm in 1974 (Harvard doctoral thesis).

- Back-propagation was independently rediscovered in the early 1980s by David Rumelhart and David Parker.

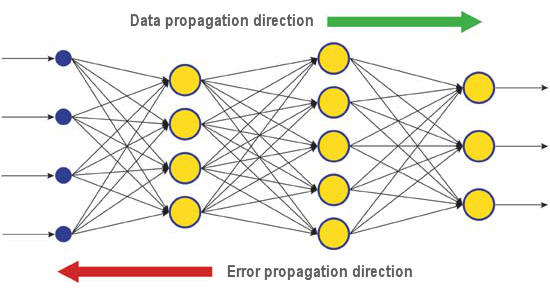

Principles

https://c.mql5.com/2/12/Fig6_Error_backpropagation.png

- Backprop learning requires a training set for teaching the network. The training set consists of inputs paired with the corresponding correct outputs. Part of this data may be set aside for testing later on.

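- When each input is given to the network, the difference between the actual output and the correct output (the error) is propagated backward from the output layer through the hidden layers. Each connection weight is then adjusted according to a general learning formula (gradient descent): it is changed in the direction that reduces the error, by an amount proportional to its contribution to that error.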

- The process is repeated many times until the network has "learnt the task", i.e. until for each input it gives an output that is close enough to the correct answer.

- Sometimes the network is unable to learn the task. It may then be necessary to change the network's architecture by adjusting the number of hidden units and the connections.

- When the network has learnt the task, the data that was set aside can be used to test whether the network is able to deal with inputs it has not encountered before.
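The steps above can be sketched in plain Python. This is a minimal illustration, not the method from the readings. It assumes one hidden layer of sigmoid units, a squared-error loss E = 0.5 Σ (y − t)², and per-example (online) updates w ← w − η ∂E/∂w. The class `TinyNet`, the toy task (label a point 1 if x1 + x2 > 1), the 80/20 split and all hyperparameters are hypothetical choices made for the example. The error terms (`d_out`, `d_hid`) are computed at the output layer first and then passed backward through the output weights, which is the "back-propagation" step.

```python
import math
import random


def sigmoid(z):
    """Logistic activation; its derivative is s * (1 - s)."""
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """Hypothetical one-hidden-layer sigmoid network trained with backprop."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # w1[j][i]: input i -> hidden unit j; w2[k][j]: hidden j -> output k
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        """Return (hidden activations, output activations) for input x."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def loss(self, x, target):
        """Squared error E = 0.5 * sum_k (y_k - t_k)^2 for one example."""
        _, y = self.forward(x)
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

    def gradients(self, x, target):
        """Backward pass: return dE/dw1, dE/db1, dE/dw2, dE/db2."""
        h, y = self.forward(x)
        # Output error signal: delta_k = (y_k - t_k) * y_k * (1 - y_k)
        d_out = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, target)]
        # Send the error back to each hidden unit through the output weights
        d_hid = [hj * (1 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j, hj in enumerate(h)]
        g_w2 = [[dk * hj for hj in h] for dk in d_out]
        g_w1 = [[dj * xi for xi in x] for dj in d_hid]
        return g_w1, d_hid, g_w2, d_out

    def train_step(self, x, target, lr):
        """Move every weight and bias against its gradient."""
        g_w1, g_b1, g_w2, g_b2 = self.gradients(x, target)
        for j, row in enumerate(self.w1):
            for i in range(len(row)):
                row[i] -= lr * g_w1[j][i]
            self.b1[j] -= lr * g_b1[j]
        for k, row in enumerate(self.w2):
            for j in range(len(row)):
                row[j] -= lr * g_w2[k][j]
            self.b2[k] -= lr * g_b2[k]


def mean_loss(net, data):
    return sum(net.loss(x, t) for x, t in data) / len(data)


def accuracy(net, data):
    return sum((net.forward(x)[1][0] > 0.5) == (t[0] > 0.5) for x, t in data) / len(data)


# Hypothetical toy task: target is 1 if x1 + x2 > 1, else 0
rng = random.Random(42)
points = [(rng.random(), rng.random()) for _ in range(200)]
data = [([a, b], [1.0 if a + b > 1 else 0.0]) for a, b in points]
train, test = data[:160], data[160:]  # set 20% aside for testing

net = TinyNet(n_in=2, n_hidden=3, n_out=1)
loss_before = mean_loss(net, train)
for epoch in range(200):            # repeat many times
    for x, t in train:              # adjust weights after each input
        net.train_step(x, t, lr=0.5)
loss_after = mean_loss(net, train)
print(f"train loss {loss_before:.3f} -> {loss_after:.3f}, "
      f"held-out accuracy {accuracy(net, test):.2f}")
```

Running it prints the training loss before and after training and the accuracy on the 40 held-out points. The exact numbers depend on the seeds. Updating after every example mirrors the description above; averaging the gradients over the whole training set before each update (batch training) is an equally common alternative.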

Biological implausibility

Backpropagation is very common in training neural networks, but it is unlikely to be the main learning algorithm used by the brain:

- Backprop is supervised learning: the correct answers must be given. Many tasks are learnt without supervision.

- Backprop is computationally intensive and slow, especially with big networks.

- It requires long-range backward neural connections to carry the error signals used for weight adjustments.