[T05] Probability and utility

Module: Basic statistics

§1. Utility

Most risks don't involve money--at least, not directly. For example, if you are worrying about the possible side-effects of a particular medication, your worry is not primarily about money. If we are going to be able to apply the concept of expected value to such contexts, we will need a way of comparing outcomes in terms which are not financial.

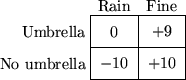

The way to do this is via the concept of utility. The utility of an outcome provides a numerical measure of how good or bad the outcome is. To take a trivial example, suppose I am trying to decide whether to take my umbrella with me today. There are four possible outcomes, because it may or may not rain, and I may or may not take my umbrella. If it rains, it is clearly better to take my umbrella than not, but if the weather is fine, then it is marginally better not to take my umbrella, so that I have fewer things to carry. The best outcome for me is if it's fine and I don't take my umbrella, so I'll assign that outcome a utility of +10. The outcome in which it's fine and I do take my umbrella is only marginally worse than that, so I'll assign it a utility of +9. The worst outcome is if it rains and I don't take my umbrella; I'll assign it a utility of -10. The outcome in which it rains and I do take my umbrella is somewhere in the middle, so I'll assign it a utility of 0.

These utilities can be expressed in a table:

| | Rain | Fine |
|---|---|---|
| Take umbrella | 0 | +9 |
| Don't take umbrella | -10 | +10 |

The numbers provide an indication of my feelings towards the various possible outcomes. Some readers may be skeptical that precise figures can be assigned to subjective feelings like this. However, the precise values of these figures are not important; all that is important is their relative values. It is clear from these figures that in fine weather I prefer not to have to carry an umbrella, but only slightly, and that I have a fairly strong preference for fine weather over rainy weather. I could equally well have used other numbers to express these preferences. It is usual to use positive numbers for good outcomes and negative numbers for bad outcomes, but this is not necessary.

So should I take my umbrella? Well, it depends on how likely it is that it will rain today. Suppose the weather forecast tells me that there is a 20% chance of rain today. Then I can calculate the expected utility of taking my umbrella; it is (0 × 1/5) + (9 × 4/5) = 7.2. Similarly, the expected utility of not taking my umbrella is (-10 × 1/5) + (10 × 4/5) = 6. Since the expected utility of taking my umbrella is greater, I should take my umbrella. Again, notice that the absolute size of these numbers is unimportant; only their relative size is significant.
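The calculation above can be checked with a short script. This is only a minimal sketch: the names `expected_utility`, `best_action`, `UTILITY` and `P_WEATHER` are illustrative choices, not part of the tutorial, and the rescaling `3*u + 50` is an arbitrary example of changing the numbers while keeping their relative sizes.

```python
from fractions import Fraction

# Probability of each weather state, from the 20% forecast in the text.
P_WEATHER = {"rain": Fraction(1, 5), "fine": Fraction(4, 5)}

# Utilities from the umbrella example, keyed by action and then by weather.
UTILITY = {
    "take": {"rain": 0, "fine": 9},
    "leave": {"rain": -10, "fine": 10},
}


def expected_utility(utilities, probabilities):
    """Sum of (utility x probability) over all weather states."""
    return sum(utilities[state] * p for state, p in probabilities.items())


def best_action(utility_table, probabilities):
    """Return the action whose expected utility is highest."""
    return max(
        utility_table,
        key=lambda action: expected_utility(utility_table[action], probabilities),
    )


eu_take = expected_utility(UTILITY["take"], P_WEATHER)    # 36/5, i.e. 7.2
eu_leave = expected_utility(UTILITY["leave"], P_WEATHER)  # 6

# Rescale every utility as 3*u + 50 (assumed, arbitrary positive rescaling).
RESCALED = {
    action: {state: 3 * u + 50 for state, u in row.items()}
    for action, row in UTILITY.items()
}

print(float(eu_take), float(eu_leave))
print(best_action(UTILITY, P_WEATHER), best_action(RESCALED, P_WEATHER))
```

Both calls to `best_action` pick the same action, which illustrates why the particular numbers chosen matter less than how they compare with one another.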

Strictly speaking, the conclusion that I should take my umbrella only follows under the assumption that I want to maximize my expected utility. Some people take this as a descriptive principle about humanity; it is a true description of human beings that they want to maximize their expected utility. Others take it as a normative principle about rationality; if you are rational, you should attempt to maximize your expected utility. Still others subscribe to neither of these principles.

Still, whether or not either of these principles holds, it is uncontroversial that there are circumstances in which people want to maximize their expected utility--for example, when I am deciding whether or not to take my umbrella. I may not explicitly go through the above calculation, but something like it goes through my mind. If the chance of rain is high enough, I take my umbrella because I don't want to get wet, and otherwise I leave it at home because I can't be bothered carrying it.
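How high is "high enough"? With the utilities from the umbrella example, one can solve for the probability of rain at which taking and leaving the umbrella have the same expected utility. The sketch below is illustrative only: the function name and its parameters are assumptions, and it expects whole-number utilities.

```python
from fractions import Fraction


def break_even_rain_probability(take_rain, take_fine, leave_rain, leave_fine):
    """Probability of rain p at which both actions have equal expected utility.

    Solves take_rain*p + take_fine*(1-p) == leave_rain*p + leave_fine*(1-p).
    Assumes integer utilities so the result is an exact fraction.
    """
    gain_if_rain = take_rain - leave_rain  # benefit of the umbrella when it rains
    cost_if_fine = leave_fine - take_fine  # nuisance of carrying it when it is fine
    return Fraction(cost_if_fine, gain_if_rain + cost_if_fine)


threshold = break_even_rain_probability(
    take_rain=0, take_fine=9, leave_rain=-10, leave_fine=10
)
print(threshold)  # 1/11
```

Under these particular utilities, taking the umbrella maximizes expected utility whenever the chance of rain exceeds 1/11, or roughly 9%, which is consistent with taking it at a 20% chance of rain.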

Nobody needs an expected utility calculation to tell them whether or not to take an umbrella, but such calculations can help in situations where more is at stake. For example, suppose you are considering whether to have your child vaccinated against whooping cough. The whooping cough vaccination protects children from a potentially fatal disease, and also has some rare but serious side-effects. Let us suppose that unvaccinated children have a 1 in 50,000 chance of dying of whooping cough, which is reduced to 1 in 1,000,000 by vaccination. Also, suppose that the vaccination carries a 1 in 200,000 chance of causing permanent brain damage. (If you were to carry out this calculation seriously, you would first need to find some reliable estimates for these probabilities; my figures are not reliable!)

To carry out the calculation, you need to assign utilities to the possible outcomes. Suppose you assign a utility of -10 to your child's death, -8 to permanent brain damage, and 0 to normal health. (Remember that the absolute size of these numbers is arbitrary; only their relative size matters. A utility of -10 in this example clearly doesn't mean the same as a utility of -10 in the previous example!) Then the expected utility of vaccinating your child is (-10 × 1/1,000,000) + (-8 × 1/200,000) = -1/20,000. The expected utility of not vaccinating your child is -10 × 1/50,000 = -1/5,000. The expected utility of not vaccinating your child is lower (a larger negative number), so to maximize your expected utility you should vaccinate your child.
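The arithmetic here involves very small fractions, so it is easy to slip. A minimal sketch using exact fractions is given below; the variable names are illustrative, and the probabilities are the tutorial's made-up figures, not real medical estimates. As in the text, the healthy outcome is left out because its utility is 0.

```python
from fractions import Fraction

# Utilities assigned in the text (only their relative sizes matter).
U_DEATH = -10
U_BRAIN_DAMAGE = -8

# Hypothetical probabilities from the text; not reliable real-world figures.
P_DEATH_VACCINATED = Fraction(1, 1_000_000)
P_DAMAGE_VACCINATED = Fraction(1, 200_000)
P_DEATH_UNVACCINATED = Fraction(1, 50_000)

eu_vaccinate = U_DEATH * P_DEATH_VACCINATED + U_BRAIN_DAMAGE * P_DAMAGE_VACCINATED
eu_no_vaccine = U_DEATH * P_DEATH_UNVACCINATED

print(eu_vaccinate)   # -1/20000
print(eu_no_vaccine)  # -1/5000
print("vaccinate" if eu_vaccinate > eu_no_vaccine else "do not vaccinate")
```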

Fred is short-sighted, and he is considering laser surgery to correct his vision. He does some research, and finds that the chance of a complete correction is 46%, the chance of a partial correction is 44%, the chance of no change in vision is 9% and the chance of a worsening of vision is 1%. Fred assigns a utility of +5 to achieving a complete vision correction, +2 to a partial correction, 0 to no change in vision, and -10 to a worsening of vision. He also assigns a utility of -2 to the cost and physical discomfort of the operation. Assuming Fred wants to maximize his expected utility, should he have the operation?
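If you would like to check your answer, one way to set up Fred's problem is sketched below. It rests on two assumptions that the exercise does not state outright: the -2 for cost and discomfort is incurred whatever the result of the operation, and declining the operation has a utility of 0 (no change in vision, no cost). The names are illustrative.

```python
from fractions import Fraction

# (probability, utility) for each possible result of the operation.
OUTCOMES = {
    "complete correction": (Fraction(46, 100), 5),
    "partial correction": (Fraction(44, 100), 2),
    "no change": (Fraction(9, 100), 0),
    "worsening": (Fraction(1, 100), -10),
}

U_COST_AND_DISCOMFORT = -2  # assumed to apply whatever the result
EU_NO_OPERATION = 0         # assumed baseline: vision unchanged, no cost

# The probabilities should cover every possible result exactly once.
assert sum(p for p, _ in OUTCOMES.values()) == 1

eu_operation = sum(p * u for p, u in OUTCOMES.values()) + U_COST_AND_DISCOMFORT

print("Expected utility of the operation:", float(eu_operation))
print("Have the operation?", eu_operation > EU_NO_OPERATION)
```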

§2. Examples and fallacies

There are many pitfalls in reasoning about risks. See if you can tell which of the following arguments are correct, and which are fallacies.

- The life expectancy in Hong Kong is 77 years for men and 83 years for women. So a man who is forty years old today can expect to live another 37 years, and a woman of the same age can expect to live another 43 years. Correct or fallacy?

- The death rate from cancer in Hong Kong has more than doubled from 73 per 100,000 population in 1961 to 160 per 100,000 population in 1999. So living in Hong Kong has become more unhealthy in recent years. Correct or fallacy?

- Between May 1995 and October 1996, 14 people in Britain died of CJD (Creutzfeldt-Jakob disease), which may have been caused by eating beef infected with BSE (bovine spongiform encephalopathy, or "mad cow disease"). The utility of dying of this disease is very low! The utility of eating beef is at most only very slightly higher than the utility of eating pork or chicken. Hence those British people who continued to eat beef after 1996 were acting irrationally, since they were lowering their expected utility. The British government eventually banned the sale of certain beef products. This ban can be justified in terms of maximizing the overall expected utility of British people. Correct or fallacy?

- Insurance companies make a profit on all the kinds of policy they offer, so the expected value of buying a travel insurance policy is negative. For the average traveller, then, if you want to minimize your expected financial losses, you shouldn't buy insurance. Correct or fallacy?

- According to Christianity, if you believe in God and live your life as a Christian, you will be rewarded with an eternal life of bliss (heaven). Heaven has an extremely high, perhaps infinite, utility. On the other hand, if there is no afterlife, your utility after death will be zero (since you no longer exist!). Consequently, the expected utility of being a Christian is given by the utility of heaven multiplied by the probability that heaven exists. The expected utility of not being a Christian is at most zero; whether or not heaven exists, you don't go. So however small the probability is that heaven exists, the expected utility of being a Christian is greater (perhaps infinitely greater) than the expected utility of not being a Christian. So if you want to maximize your expected utility, you should be a Christian. Correct or fallacy?